Data storage

The first obstacle researchers face is limited data storage. When running continuously, top-of-the-line systems produce terabytes of data each day, all of which needs to be stored reliably. For instance, the three-dimensional (3D) EM field has grown rapidly in the last few years, and so have the number, size and quality of the images used to reconstruct sample volumes. These images need to be registered and aligned relative to each other in three dimensions, which requires substantial computational power as well as manual validation and adjustment.
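
To get a feel for the scale, the daily data volume can be estimated from the acquisition rate. The sketch below is a back-of-the-envelope calculation only: the function name, the pixel rate and the bit depth are illustrative assumptions, not figures for any specific instrument.

```python
def daily_data_volume_tb(
    pixels_per_second: float,
    bytes_per_pixel: int = 1,
    duty_cycle: float = 1.0,
) -> float:
    """Estimate raw (uncompressed) data produced per day, in terabytes.

    All parameters are hypothetical inputs chosen by the reader:
    - pixels_per_second: sustained acquisition rate of the microscope
    - bytes_per_pixel:   1 for 8-bit images, 2 for 16-bit images
    - duty_cycle:        fraction of the day spent actually acquiring (0..1)
    """
    seconds_per_day = 24 * 60 * 60
    total_bytes = pixels_per_second * bytes_per_pixel * seconds_per_day * duty_cycle
    return total_bytes / 1e12  # decimal terabytes


# Example with an assumed rate of 100 megapixels per second at 8 bits per pixel.
print(f"{daily_data_volume_tb(100e6):.2f} TB/day")
```

Under these assumed numbers, a single instrument running around the clock would produce several terabytes per day before any compression, which is why storage has to be planned at the start of a project rather than added later.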

Data sharing

The second challenge is data sharing. Improving data-sharing processes is extremely important for the microscopy field, as it allows researchers to analyze data collaboratively and to re-use datasets to examine different properties of the imaged material. Care must be taken to ensure that shared data follows agreed-upon standards and conventions, so that it is accessible through common tools [1], and that metadata (experimental details, imaging settings) is shared in a common, easily understandable format.
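
As an illustration of what sharing metadata in a common format can look like in practice, the sketch below stores imaging settings in a JSON file alongside the image data. The class and every field name are hypothetical and chosen for illustration; they do not follow any particular community standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class AcquisitionMetadata:
    """Hypothetical record of the settings used to acquire one dataset."""

    sample_id: str
    pixel_size_nm: float
    section_thickness_nm: float
    dwell_time_ns: float
    detector: str


def to_json(meta: AcquisitionMetadata) -> str:
    """Serialize the metadata to human-readable JSON with stable key order."""
    return json.dumps(asdict(meta), indent=2, sort_keys=True)


def from_json(text: str) -> AcquisitionMetadata:
    """Rebuild the metadata record from its JSON form."""
    return AcquisitionMetadata(**json.loads(text))


# Example values are made up for demonstration purposes.
meta = AcquisitionMetadata(
    sample_id="example-sample-001",
    pixel_size_nm=4.0,
    section_thickness_nm=80.0,
    dwell_time_ns=400.0,
    detector="example-detector",
)
print(to_json(meta))
```

A plain-text format such as JSON keeps the metadata readable by both people and software, so collaborators can interpret a dataset without access to the original acquisition software.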

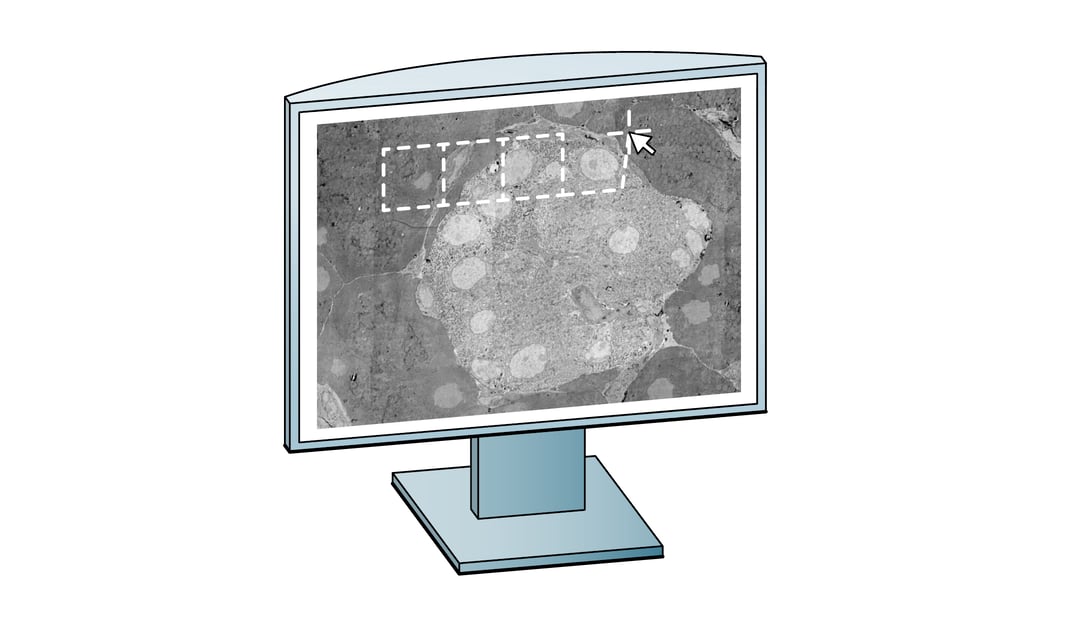

Visualization

The third challenge is visualization: users need a straightforward way to browse very large image volumes. Specialized tools have been developed for volumetric data, such as the publicly available Neuroglancer [2] and CATMAID [3]. Datasets can be examined without the client having to download the data in its entirety, as only the requested content is streamed from central storage to the user. Such server-client models enable easier access to public data, which has historically been a challenge in large-scale projects. Thanks to these tools, users can navigate large multidimensional biological image datasets and collaboratively annotate features in them [3,4]. These annotations can then easily be shared for publication and verification.
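
The idea of streaming only the requested content can be sketched as follows: if a volume is stored as fixed-size chunks, a viewer only needs to fetch the chunks that overlap the region currently on screen. This is a generic illustration under that assumption, not a description of how Neuroglancer or CATMAID are implemented; the function name and chunk sizes are hypothetical.

```python
from itertools import product
from typing import List, Sequence, Tuple


def chunks_for_region(
    start: Sequence[int],
    stop: Sequence[int],
    chunk_shape: Sequence[int],
) -> List[Tuple[int, ...]]:
    """Return the grid indices of all chunks overlapping a region.

    start/stop are voxel coordinates of the requested region (stop is
    exclusive), and chunk_shape is the size of one stored chunk per axis.
    """
    per_axis = [
        # First and last chunk index touched along this axis.
        range(lo // size, (hi - 1) // size + 1)
        for lo, hi, size in zip(start, stop, chunk_shape)
    ]
    return list(product(*per_axis))


# A single 2D slice view of 1024 x 1024 voxels from a volume stored in
# 64 x 64 x 64 chunks touches 16 x 16 x 1 chunks, however large the volume is.
needed = chunks_for_region((0, 0, 500), (1024, 1024, 501), (64, 64, 64))
print(len(needed))
```

The number of chunks fetched depends on the size of the view, not on the size of the dataset, which is what makes browsing multi-terabyte volumes from an ordinary workstation feasible.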

Data analysis

The final challenge is data analysis. Manual segmentation and annotation quickly become prohibitively laborious in larger volumes, revealing a need for reliable automation. Unfortunately, automatic segmentation was fairly inaccurate until recently, so manual proofreading and annotation were essential for achieving error-free analysis. Early connectomics datasets therefore often required multiple person-years of labor to analyze only small parts of the sample volume [3].

Improvements in computing power and machine learning are now revolutionizing this field: automated segmentation tools are becoming fast and accurate enough to perform many tasks without human intervention, opening the way towards annotating the connectomes of larger model animals. Although the computational costs are still substantial, one such approach has been applied to trace neurons within the zebra finch brain, where it was able to automatically segment neurons with long, error-free neurite paths [5]. Further development of these tools will help minimize the workload of current semi-automated workflows, which still depend on human labor to validate error-prone segmentation steps [4].

Next steps

Volume EM is going through an exciting time: electron microscopes are getting faster and more efficient, which makes improvements in data storage, visualization and automated analysis essential for larger projects. With these improvements, the resulting data can be analyzed faster and more easily made public for review or collaborative work. Learn more about Delmic’s upcoming FAST-EM system for large-scale imaging projects.

References

[1] Patwardhan, A., Carazo, J.M., Carragher, B., et al. Data management challenges in three-dimensional EM. Nat Struct Mol Biol. 2012; 19(12):1203-1207. doi:10.1038/nsmb.2426

[2] Neuroglancer, a WebGL-based viewer for volumetric data. https://github.com/google/neuroglancer

[3] Saalfeld, S., Cardona, A., Hartenstein, V., Tomančák, P. CATMAID: collaborative annotation toolkit for massive amounts of image data. Bioinformatics. 2009; 25(15):1984–1986. doi:10.1093/bioinformatics/btp266

[4] Takemura, S., Bharioke, A., Lu, Z., et al. A visual motion detection circuit suggested by Drosophila connectomics. Nature. 2013; 500:175–181. doi:10.1038/nature12450

[5] Januszewski, M., Kornfeld, J., Li, P.H., et al. High-precision automated reconstruction of neurons with flood-filling networks. Nat Methods. 2018; 15:605–610. doi:10.1038/s41592-018-0049-4
